A Simple Crawler Implementation

I've been learning a bit of Python.

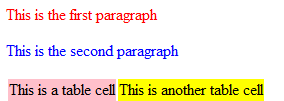

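I wrote a simple crawler. It has no exception handling and isn't wrapped in a class (it really is very basic...), but it will do for now. Install Python 3.x and set up your environment variables. Then save the code as crawler.py and create a folder named data in the same directory. Run python crawler.py in a terminal to start crawling. The output goes into the data folder, with files named after each article's date.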
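The listing in this post breaks off before the code that writes files, so here is a minimal sketch of how an article might be saved into the data folder under its date. The helper name `save_article`, the `.txt` extension, and the numeric suffix for articles sharing a date are all assumptions made for illustration; this is not the original `article2file`.

```python
import os


def save_article(date, text, data_dir='data'):
    """Write text to data_dir/<date>.txt and return the path (hypothetical helper)."""
    # Create the output folder if it is missing
    os.makedirs(data_dir, exist_ok=True)
    path = os.path.join(data_dir, '{0}.txt'.format(date))
    # Assumption: several articles can share a date, so add a numeric suffix
    n = 1
    while os.path.exists(path):
        n += 1
        path = os.path.join(data_dir, '{0}_{1}.txt'.format(date, n))
    with open(path, 'w', encoding='utf-8') as f:
        f.write(text)
    return path
```

With this sketch, saving two articles dated 2013-04-11 would produce `2013-04-11.txt` and `2013-04-11_2.txt` inside the data folder, so a later article never overwrites an earlier one.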

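The results contain both Chinese and English text, along with each article's title, date, and source, so they can be used to build a parallel corpus.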
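To illustrate how those fields can be pulled out of a page, here is a small sketch that applies bytes regexes, in the style of the crawler's own patterns, to a made-up HTML fragment. The fragment, the `extract_fields` helper, and the simplified source and date patterns are assumptions; the real pages on hxen.com may be laid out differently.

```python
import re

# Patterns modelled on the crawler's; the source and date patterns are simplified
title_regex = rb'<b><h1>([\s\S]*?)</h1></b>'
source_regex = rb"Source: <a href='[^']*' target=_blank>([\s\S]*?)</a>"
date_regex = rb'(\d{4}-\d{2}-\d{2})'


def extract_fields(rawdata, encoding='gbk'):
    """Return title, source and date found in raw page bytes (hypothetical helper)."""
    fields = {}
    for name, regex in (('title', title_regex),
                        ('source', source_regex),
                        ('date', date_regex)):
        match = re.search(regex, rawdata)
        # Missing fields become None instead of raising IndexError
        fields[name] = match.group(1).decode(encoding).strip() if match else None
    return fields


# Made-up sample, encoded as GBK (the encoding the crawler decodes with)
sample = ("<b><h1>双语新闻 Bilingual News</h1></b>"
          "Source: <a href='http://example.com/' target=_blank>Example</a> "
          "2013-04-11 ").encode('gbk')
print(extract_fields(sample))
```

Matching on bytes and decoding only the captured groups keeps the patterns independent of the page encoding until the last step, which is why the crawler's patterns are written as bytes literals.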

[python]

#!/usr/bin/env python
# -*- coding:utf-8 -*-
# author:xizer00
# date:2013/04/11
# crawling the hxen.com
import urllib.request as request
import re
import io
import socket  # needed for the socket.timeout check in getPage

# Initial URL
init_url = r'http://hxen.com/interpretation/bilingualnews/'

# Regex for the total number of articles
numofarticle_regex = rb'<b>(\d+?)</b> '

# Regex for the total number of index pages
numofpage_regex = rb'<b>\d+?/(\d+?)</b>'

# Regex for content-page URLs on the current index page
contenturls_regex = rb'<td height="25"> <font face="Wingdings">v</font> <a href="([a-zA-Z0-9-\s\.\/]+)" target=_blank>'

# Regex for the title of a content page
title_regex = rb'<b><h1>([\s\S]*?)</h1></b>'
#title_regex = rb'<b><h1>([\s\S]*)</h1></b>'

# Regexes for the body of a content page
content1_regex = rb'<p>([\s\S]*?)</p>'
#content1_regex = rb'<p>([\s\S]*)<p>'
content2_regex = rb'<p>([\s\w(),.`~!@#$%^&*/;:{}\[\]]*?)<script'
#content2_regex = rb'<p>([\s\S]*)<script'

# Article source
source_regex = rb"Source: <a href='[\w:\/._\s]*' target=_blank>([\s\S]*?)</a>"

# Publication date
date_regex = rb'([\d-]+) '

# Leftover markup to strip
junk1_regex = rb'&[\w]*?;'
junk2_regex = rb'<br[\s]*/>'
# A bytes literal cannot hold non-ASCII characters, so encode a str instead
junk3_regex = '<a href=http://www.hxen.com/englishlistening/易做图english/ target=_blank class=infotextkey>VOA</a>'.encode('gbk')

def crawler(url):
    # Print summary information
    getInfo(url)
    # Get the index page URLs
    pageurls = getAllPageUrls(url)
    # Walk every index page, collect its content-page URLs, and scrape each one
    #contents = []
    for pageurl in pageurls:
        contenturls = getContentUrls(pageurl)
        for contenturl in contenturls:
            if contenturl=='http://hxen.com/interpretation/bilingualnews/20111112/160028.html':
                break
            else:
                content = getContentData(contenturl)
                article2file(content)
                #print(content)  # probably has problems
    print('Crawl finished!')

# Fetch a URL and return the response object
def getPage(url):
    try:
        page = request.urlopen(url)
        code = page.getcode()
        if code < 200 or code >= 300:
            print('unexpected status code: {0}'.format(code))
    except Exception as e:
        if isinstance(e, request.HTTPError):
            print('http error: {0}'.format(e.code))
        elif isinstance(e, request.URLError) and isinstance(e.reason, socket.timeout):
            print('url error: socket timeout {0}'.format(e))
        else:
            print('misc error: {0}'.format(e))
        # Re-raise: page is unbound here, so returning it would fail anyway
        raise
    return page

# Print summary information
def getInfo(url):
    page = getPage(url)
    rawdata = page.read()
    numofarticles = re.findall(numofarticle_regex,rawdata)[0]
    numofpages = re.findall(numofpage_regex,rawdata)[0]
    print('Total number of articles: %d'%int(numofarticles))
    #print('Page count from the first page: %d'%int(numofpages))
    return

# Build the list of all index pages
def getAllPageUrls(url):
    page = getPage(url)
    rawdata = page.read()
    numofpages = re.findall(numofpage_regex,rawdata)[0]
    inumofpages = int(numofpages)
    # The first index page has no number; the rest are index_2.html, index_3.html, ...
    pageurls =['http://hxen.com/interpretation/bilingualnews/index.html']
    for x in range(2,inumofpages+1):
        pageurls.append(r'http://hxen.com/interpretation/bilingualnews/index_%d.html'%x)
    print('Index page URLs:')
    for x in pageurls:
        print(x)
    return pageurls

# Get the content-page URLs listed on an index page
def getContentUrls(url):
    page = getPage(url)
    rawdata = page.read()
    rawcontenturls = re.findall(contenturls_regex,rawdata)
    contenturls = []
    # Matches are site-relative bytes paths; decode them and prepend the host
    for rawurl in rawcontenturls:
        contenturls.append(r'http://hxen.com%s'%rawurl.decode('gbk'))
    print('Content URLs found:')
    for contenturl in contenturls:
        print(contenturl)
    return contenturls

# Scrape the data from a content page

def getConten
